Johannes Brandstetter

Co-founder and Chief Scientist @ Emmi AI, Assistant Professor @ JKU Linz

Johannes Kepler University, Linz, Institute for Machine Learning

About me

I lead the group “AI for data-driven simulations” at the Institute for Machine Learning at Johannes Kepler University (JKU) Linz. Additionally, I am a Co-founder and Chief Scientist at Emmi AI, our push towards the data-driven revolution in science and engineering.

I obtained my PhD after working for several years at the CMS experiment at CERN. During this time, I had the privilege of learning from brilliant minds from all around the world, and got the chance to co-author seminal papers in the realm of Higgs boson physics. In 2018, after completing my PhD, my career trajectory shifted towards machine learning, and I was fortunate to join the research group of Mr LSTM Sepp Hochreiter in Linz. Under Sepp’s mentorship, I delved into the intricacies of machine learning and modern deep learning over a span of 2.5 years.

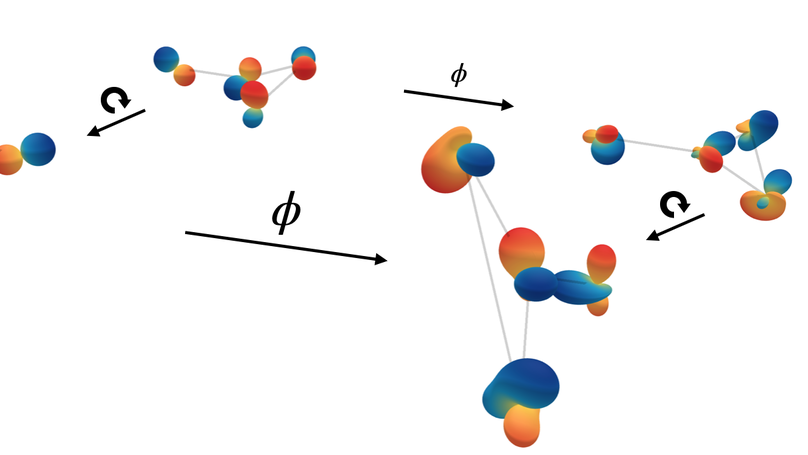

From 2021 to 2023, I had the pleasure of spending three remarkable years in Amsterdam. Initially, I was part of the Amsterdam Machine Learning Lab led by Max Welling, and subsequently joined Microsoft Research for 2 years. During this period, my passion for Geometric Deep Learning, particularly involving Geometric (Clifford) algebras, and my interest in partial differential equations (PDEs), with a particular focus on developing neural surrogates for PDEs, became profound. Most importantly, I pivoted towards large-scale PDEs, including weather and climate modeling, which culminated in Aurora.

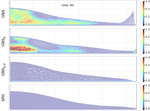

My years in Amsterdam have shaped my research vision. I am firmly convinced that AI is on the cusp of disrupting simulations at industry scale. Every day, thousands and thousands of compute hours are spent on turbulence modeling, simulations of fluid or air flows, heat transfer in materials, traffic flows, and many more. Many of these processes follow similar underlying patterns, yet they need different and extremely specialized software to simulate. Even worse, for different parameter settings the costly simulations need to be run at full length from scratch.

This is what I want to change! Therefore, I have started a new group at JKU Linz which has strong computer vision, numerical simulation, and engineering components. We want to advance data-driven simulations at industry scale, and establish Linz, the engine of Austrian industry, as a center for doing that.

Emmi AI

About Emmi AI

At Emmi AI, we believe that the next frontier of industrial engineering lies at the intersection of artificial intelligence and physics. The world of large-scale engineering and manufacturing is ripe for transformation, yet today’s innovation processes are hindered by slow, costly simulations and prohibitive computational requirements.

Emmi AI is here to change that.

Our vision is bold: to pioneer universally applicable AI-driven physics simulation models that accelerate engineering and manufacturing processes across industries – from aviation and energy to semiconductors, automotive and chemicals. We aim to enable real-time interaction, streamline production planning, and unlock new possibilities in design optimization.

The future we envision is one where AI surrogates reduce computational costs, foster rapid innovation, and drive industrial-scale engineering at unprecedented speeds. Our starting point is computational fluid dynamics (CFD), but our ambition extends to real-time adaptive simulations and multi-physics models.

Industrial-scale problems demand industrial-scale solutions. Much like medium-range weather forecasting challenged deep learning due to its scale, industrial simulations with tens of millions of mesh cells present a similar challenge – one we are ready to tackle. We are inspired by the ambition and intellectual rigor of Emmy Noether, whose groundbreaking contributions to physics mirror our commitment to redefining industrial engineering.

Emmi AI is not just building models; we are building the future of industrial innovation. As we scale, we seek partners who share our conviction: that AI is not merely a tool, but a catalyst for transforming how we design, build, and innovate.

To our investors, collaborators, and future team members – join us as we push the boundaries of what’s possible. Together, we will unlock a new era of efficiency, creativity, and excellence in engineering.

Let’s build the future!

More information can be found on our website: https://www.emmi.ai/.

Research

I am firmly convinced that AI is on the cusp of disrupting simulations at industry scale. Therefore, I have started a new group at JKU Linz which has strong computer vision, simulation, and engineering components. My vision is shaped by experience both from university and from industry.

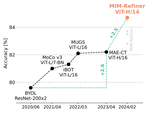

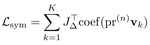

My passion for Geometric Deep Learning can be unmistakably traced back to my physics background. I have contributed to the fields of graph neural networks, equivariant architectures, and neural PDE solvers. Furthermore, I have led efforts to introduce Lie Point Symmetries, and, most recently, Clifford (Geometric) Algebras into the Deep Learning community.

After switching from High Energy Physics to Deep Learning, I started working in Reinforcement Learning before pivoting towards Associative Memories and modern Transformer networks. Recent years have shown that scalable ideas, improving the datasets, and clever engineering are the ingredients for ever better Deep Learning models. This totally coincides with my experience, and – needless to say – I will continue working on general large-scale Deep Learning directions.

Recent (selected) Publications

Open Positions

[Nov 2023] Open positions can be found here.